The Pagoda Project

|

The Pagoda Project researches and develops cutting-edge software for implementing high-performance applications and software infrastructure under the Partitioned Global Address Space (PGAS) model. The project has been primarily funded by the Exascale Computing Project, and interacts with partner projects in industry, government and academia. |

Software

Pagoda Software Ecosystem

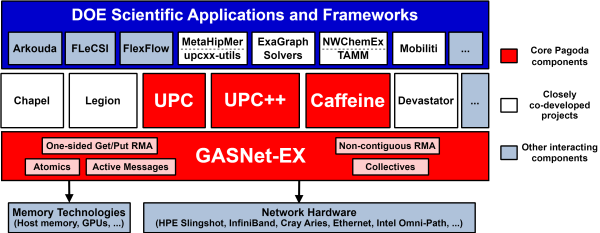

The Pagoda software ecosystem is built atop GASNet-EX, our high-performance communication portability layer. GASNet-EX supports a number of parallel programming models and frameworks developed by LBNL and other institutional partners. The Pagoda project additionally encompasses development of the UPC++ and Berkeley UPC programming model productivity layers, as well as the Caffeine Fortran parallel runtime.

GASNet-EX

GASNet is a language-independent, networking middleware layer that provides network-independent, high-performance communication primitives including Remote Memory Access (RMA) and Active Messages (AM). It has been used to implement parallel programming models and libraries such as UPC, UPC++, Co-Array Fortran, Legion, Chapel, and many others. The interface is primarily intended as a compilation target and for use by runtime library writers (as opposed to end users). The primary goals are high performance, interface portability, and expressiveness.

Overview Paper:

Dan Bonachea, Paul H. Hargrove, "GASNet-EX: A High-Performance, Portable Communication Library for Exascale", Languages and Compilers for Parallel Computing (LCPC'18), Salt Lake City, Utah, USA, October 11, 2018, LBNL-2001174, doi: 10.25344/S4QP4W

20th Anniversary Retrospective News Article:

Linda Vu,"Berkeley Lab’s Networking Middleware GASNet Turns 20: Now, GASNet-EX is Gearing Up for the Exascale Era", HPCWire (Lawrence Berkeley National Laboratory CS Area Communications), December 7, 2022, doi: 10.25344/S4BP4G

UPC++

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming, and is designed to interoperate smoothly and efficiently with MPI, OpenMP, CUDA and AMTs. It leverages GASNet-EX to deliver low-overhead, fine-grained communication, including Remote Memory Access (RMA) and Remote Procedure Call (RPC).

Overview Paper:

John Bachan, Scott B. Baden, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Dan Bonachea, Paul H. Hargrove, Hadia Ahmed, "UPC++: A High-Performance Communication Framework for Asynchronous Computation", 33rd IEEE International Parallel & Distributed Processing Symposium (IPDPS'19), Rio de Janeiro, Brazil, IEEE, May 2019, doi: 10.25344/S4V88H

Caffeine

Caffeine is a parallel runtime library that aims to support Fortran compilers by providing a programming-model-agnostic Parallel Runtime Interface for Fortran (PRIF) that can be implemented atop various communication libraries. Current work is on supporting the PRIF interface with communication using GASNet-EX.

Overview Paper:

Damian Rouson, Dan Bonachea, "Caffeine: CoArray Fortran Framework of Efficient Interfaces to Network Environments", Proceedings of the Eighth Annual Workshop on the LLVM Compiler Infrastructure in HPC (LLVM-HPC2022), Dallas, Texas, USA, IEEE, November 2022, doi: 10.25344/S4459B

Berkeley UPC

Unified Parallel C (UPC) is a PGAS extension of the C programming language designed for high performance computing on large-scale parallel machines. The language provides a uniform programming model for both shared and distributed memory hardware. Berkeley UPC is a portable, high-performance implementation of the UPC language using GASNet for communication.

Overview Paper:

Wei Chen, Dan Bonachea, Jason Duell, Parry Husbands, Costin Iancu, Katherine Yelick, "A Performance Analysis of the Berkeley UPC Compiler", Proceedings of the International Conference on Supercomputing (ICS) 2003, December 1, 2003, doi: 10.1145/782814.782825

Project Participants

Current Team Members

Current and Past Contributors

- Hadia Ahmed (bodo.ai)

- John Bachan (NVIDIA)

- Scott B. Baden (UCSD, INRIA, LBNL)

- Julian Bellavita (Cornell University)

- Rob Egan (JGI, LBNL)

- Marquita Ellis (IBM)

- Sayan Ghosh (PNNL)

- Max Grossman (Georgia Tech)

- Steven Hofmeyr (LBNL)

- Khaled Ibrahim (LBNL)

- Mathias Jacquelin (Cerebras Systems)

- Bryce Adelstein Lelbach (NVIDIA)

- Colin A. MacLean (NextSilicon)

- Alexander Pöppl (TUM)

- Brad Richardson (NASA)

- Hongzhang Shan

- Brian van Straalen (LBNL)

- Daniel Waters (NVIDIA)

- Samuel Williams (LBNL)

- Katherine Yelick (LBNL)

- Yili Zheng (Google)

Publications

The Pagoda project officially began in early 2017 to continue development of the UPC++ and GASNet PGAS communication libraries under a unified umbrella. In addition to work since 2017, the list below includes a subset of publications from earlier work on GASNet (pubs), UPC (pubs) and the predecessor to the UPC++ (pubs) library written by many of the same group members under previous project funding. Consult linked project pages for complete publication history.

2025

Damian Rouson, Zhe Bai, Dan Bonachea, Baboucarr Dibba, Ethan Gutmann, Katherine Rasmussen, David Torres, Jordan Welsman, Yunhao Zhang, "Cloud microphysics training and aerosol inference with the Fiats deep learning library", UCAR–SEA Improving Scientific Software Conference (ISS25), August 2025, doi: 10.25344/S4QS3J

This notebook presents two atmospheric sciences demonstration applications in the Fiats deep learning software repository. The first, train-cloud-microphysics, trains a neural-network cloud microphysics surrogate model that has been integrated into the Berkeley Lab fork of the Intermediate Complexity Atmospheric Research (ICAR) model. The second, infer-aerosol, performs parallel inference with an aerosol dynamics surrogate pretrained in PyTorch using data from the Energy Exascale Earth System Model (E3SM). This notebook presents the program statements involved in using Fiats for aerosol inference and microphysics training. In order to also give the interested reader direct experience with using Fiats for these purposes, the notebook details how to run two simpler example programs that serve as representative proxies for the demonstration applications. Both proxies are also example programs in the Fiats repository. The microphysics training proxy is a self-contained example requiring no input files. The aerosol inference proxy uses a pretrained aerosol model stored in the Fiats JavaScript Object Notation (JSON) file format and hyperlinked into this notebook for downloading, importing, and using to perform batch inference calculations with Fiats.

Brandon Cook, Damian Rouson, Dan Bonachea, "US04: Non-blocking Collective Subroutines", JTC1/SC22/WG5 ISO Fortran Standards document (WG5/N2245), June 2025,

Proposal for adding explicitly non-blocking collective subroutines to the worklist for Fortran 202Y.

Reinhold Bader, Dan Bonachea, HPC, "DIN1: Collectives over a specified team, req/spec/syntax/edits", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-127r1), June 2025,

This paper contains formal requirements, specifications, syntax and edits for Fortran 202Y proposal DIN1, collectives over a specified team.

Gary Klimowicz, Dan Bonachea, Patrick Fasano, Steve Lionel, "Formal specifications for the Fortran preprocessor (FPP)", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-142r2), June 2025,

Damian Rouson, Zhe Bai, Dan Bonachea, Kareem Ergawy, Ethan Gutmann, Michael Klemm, Katherine Rasmussen, Brad Richardson, Sameer Shende, David Torres, Yunhao Zhang, "Automatically parallelizing batch inference on deep neural networks using Fiats and Fortran 2023 `do concurrent`", Fifth International Workshop on Computational Aspects of Deep Learning (CADL), June 2025, doi: 10.25344/S4VG6T

This paper introduces novel programming strategies that leverage features of the Fortran 2023 standard of the International Standards Organization (ISO) to automatically parallelize computations on deep neural networks. The paper focuses on the interplay of object-oriented, parallel, and functional programming paradigms in the Fiats deep learning library. We demonstrate how several infrequently used language features play a role in enabling efficient, parallel execution. Specifically, the ability to explicitly declare that a procedure is pure facilitates inference in the context of the language’s loop-parallelism construct `do concurrent`. Also, explicitly prohibiting the overriding of a parent type’s type-bound procedures eliminates the need for dynamic dispatch in performance-critical code. Finally, this paper uses batch inference calculations on a neural network surrogate for atmospheric aerosol dynamics to demonstrate that LLVM Flang compiler’s automatic parallelization of `do concurrent` achieves roughly the same performance and scalability as achieved by OpenMP compiler directives. We also demonstrate that double-precision inference costs 37–72% longer runtime than default-real precision with most values in the range 57-60%.

Brandon Cook, Dan Bonachea, "Requirements for US20: Local Prefix Operation Intrinsics", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-145r1), June 2025,

Scan, or prefix reduction, operations are fundamental building blocks in parallel algorithms and data manipulation tasks. The SCAN and CO_SCAN proposal (J3/23-235r2) received "mixed support" at a previous meeting, and prospective work items, including prefix reduction operations, were "conditionally accepted" at the 2024 meeting, pending further discussion. They were again discussed at the February 2025 WG5 meeting, and subsequently promoted to "accepted" work item US20 via WG5 letter ballot in May 2025 (WG5/N-2239). The result of the WG5 vote was 20 yes 5 no and 1 undecided with several informative comments.

This document focuses on requirements exclusively for the local prefix reduction variant, refining previous concepts based on community and WG5 feedback. By focusing exclusively on the local variant our aim is to allow consideration independent of the closely related but distinct collective subroutines. We do however revisit use cases briefly for clarity as we are now addressing the two variants separately.

Brandon Cook, Dan Bonachea, "Requirements for US20 collective subroutines for prefix operations", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-144r1), June 2025,

Scan, or prefix reduction, operations are fundamental building blocks in parallel algorithms and data manipulation tasks. The original `SCAN` and `CO_SCAN` proposal received "mixed support" , and prefix reduction operations were subsequently "conditionally accepted" as a prospective work item for F202Y at the 2024 WG5 meeting, pending further discussion and refinement. They were again discussed at the February 2025 WG5 meeting, and subsequently promoted to "accepted" work item US20 via WG5 letter ballot in May 2025 (WG5/N-2239). The result of the WG5 vote was 20 yes 5 no and 1 undecided with several informative comments.

This paper focuses exclusively on requirements for the collective subroutine variant refining previous concepts based on community and WG5 feedback. Our aim is to allow consideration independent of the closely related but distinct local intrinsics. We briefly revisit use cases as we are now addressing the two variants separately.

Paul H. Hargrove, Dan Bonachea, "Investigation into the Performance Benefits of Exposing Network Backpressure in UPC++ and GASNet-EX", Lawrence Berkeley National Laboratory Technical Report, May 2025, LBNL 2001668, doi: 10.25344/S4088R

This document is a brief summary of the research, and supporting development efforts, conducted by the project "Investigation into Improving Dynamic Adaptivity to System-Level Asynchrony in UPC++". We tested the hypothesis "The UPC++ and GASNet-EX runtimes can expose information from the network stack that enables applications to dynamically adapt to congestion, improving total throughput". We present experimental results from both a microbenchmark and an application benchmark that support this hypothesis.

Katherine Rasmussen, Damian Rouson, Dan Bonachea, Julienne + Assert == Correctness-Checking for Functional Fortran, Improving Scientific Software Conference, April 2025, doi: 10.25344/S4401K

The agile software development practice of test-driven development (TDD) advocates unit testing as an essential driver of software design and construction. In TDD, tests of individual units of software (e.g., procedures) serve documentation and verification roles. As documentation, tests specify the behaviors required for code correctness. Executing a suite of tests verifies that the actual behaviors satisfy the documented requirements. As inspired by the Veggies and Garden unit testing frameworks for modern Fortran, the more lightweight Julienne framework uses the Template Method pattern to report serial or parallel test results in the form of a specification (https://go.lbl.gov/julienne). As such, Julienne’s test output names the test subject (e.g., a class or type-bound procedure), the expected behavior, the test outcome (pass or fail), and provides diagnostic information if a test fails.

The use of Julienne centers around users defining a test in the form of a non-abstract child type that extends Julienne’s abstract test_t derived type. The user’s child type thus inherits an obligation to define type-bound procedures that name the subject of the test and provide the test results. As a template method, test_t’s type-bound “report” procedure invokes the user’s procedures by referencing the aforementioned deferred bindings and reporting on the collective success or failure across multiple images (processes) in programs that use Fortran’s multi-image parallel programming features.

Working from the example test suite in the Julienne repository, attendees will learn how to write and run a simple test suite, including how to use Julienne’s string-handling for producing rich diagnostic information from a failing test. Attendees will also see examples of Julienne’s use in other Berkeley Lab software projects such as the Fiats deep learning library and Matcha T-cell motility simulator.

Attendees will also learn a functional programming pattern developed and used by the Berkeley Lab Fortran presenters. Functional programming centers around the definition of pure procedures that are free of side effects, including file input and output. To supplement the material on external verification via unit tests, this tutorial will also introduce our Assert utility library and Assert’s use for runtime correctness-checking inside procedures (https://go.lbl.gov/assert). Attendees will learn how Assert addresses a common reason developers cite for not writing pure procedures: a desire to produce diagnostic output when debugging code. We posit that most developers seek output to verify an expectation about data and that such expectations can be stated in assertions that take the form of logical expressions. Attendees will learn how Assert empowers developers to obtain rich, customized diagnostic information through character stop codes when an assertion fails, resulting in error termination. Attendees will also learn how to use Assert in such a way that guarantees zero runtime overhead by automatically eliminating assertions in production builds of user software.

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, "Caffeine: A parallel runtime library for supporting modern Fortran compilers", Journal of Open Source Software, edited by Daniel S. Katz, March 29, 2025, 10(107), doi: 10.21105/joss.07895

The Fortran programming language standard added features supporting single-program, multiple data (SPMD) parallel programming and loop parallelism beginning with Fortran 2008. In Fortran, SPMD programming involves the creation of a fixed number of images (instances) of a program that execute asynchronously in shared or distributed memory, except where a program uses specific synchronization mechanisms. Fortran’s “coarray’’ distributed data structures offer a subscripted, multidimensional array notation defining a partitioned global address space (PGAS). One image can use this notation for one-sided access to another image’s slice of a coarray.

The CoArray Fortran Framework of Efficient Interfaces to Network Environments (Caffeine) provides a runtime library that supports Fortran’s SPMD features. Caffeine implements inter-process communication by building atop the GASNet-EX exascale networking middleware library. Caffeine is the first implementation of the compiler- and runtime-agnostic Parallel Runtime Interface for Fortran (PRIF) specification. Any compiler that targets PRIF can use any runtime that supports PRIF. Caffeine supports researching the novel approach of writing most of a compiler’s parallel runtime library in the language being compiled: Caffeine is primarily implemented using Fortran’s non-parallel features, with a thin C-language layer that invokes the external GASNet-EX communication library. Exploring this approach in open source lowers a barrier to contributions from the compiler’s users: Fortran programmers. Caffeine also facilitates research such as investigating various optimization opportunities that exploit specific hardware such as shared memory or specific interconnects.

Damian Rouson, What Happens to a Dream Deferred? Chasing Language-Based Parallel Programming for HPC and AI, SIAM Conference on Computational Science and Engineering (CSE25), March 5, 2025, doi: 10.25344/S47S36

In 1951, Harlem Renaissance poet Langston Hughes asked this talk's titular question at the outset of a poem entitled "Harlem." Six years later, IBM mathematician John Backus developed Fortran, the world's first widely used high-level programming language. Backus later explored functional programming and highlighted the functional style in his Turing Award lecture in 1977, a year that also demarcates what one might consider the end of the classical era of Fortran. Building on a vision the presenter first conceived around the turn of the 21st century while teaching in Harlem, this talk will demonstrate how Fortran 2023 can finally deliver on Backus's functional programming dream in traditional high-performance computing (HPC) domains such as partial differential equation (PDE) solvers and in emerging domains such as artificial intelligence (AI). For PDE solvers, the talk will describe language facilities for asynchronously evaluating expressions that apply discrete, parallel, purely-functional differential operators to software abstractions that model continuous mathematical abstractions. For AI, the talk will demonstrate that Fortran's native concurrent loop iterations can combine with side-effect-free, pure procedures to facilitate automatically parallelizing deep-learning inference and training algorithms on processors and accelerators. The talk will provide updates on an ongoing effort by Berkeley Lab's Fortran team to realize this dream by through our work at multiple levels in the software stack, including applications, compiler runtime libraries, and networking middleware. Along the way, the talk will highlight ways in which programs promoting inclusivity in science facilitated significant aspects of the presented work.

SIAM Conference on Computational Science and Engineering (CSE25)

Dan Bonachea, HPC, "F202Y feature request: collectives over a specified team", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-125r1), February 2025,

As of Fortran 2023, the collective subroutine intrinsics (CO_BROADCAST, CO_MAX, CO_MIN, CO_REDUCE, and CO_SUM) may only be executed over the current team, as defined by the CHANGE TEAM construct. This becomes very awkward when one needs to execute such a collective over an ancestor team; because there is no way to directly express that without closing the CHANGE TEAM construct, and invoking END TEAM may have undesired side-effects such as deallocating team-specific coarrays. It would also be convenient to allow collectives directly over a child team without forcing the synchronization side effects associated with a CHANGE TEAM to that child team.

The collective subroutines of Fortran should support execution in a specified team that is not the current team.

Paper PASSED by roll call vote at INCITS/US Fortran Programming Language Standards Technical Committee meeting #235

Gary Klimowicz, Dan Bonachea, Aury Shafran, "Fortran preprocessor requirements", INCITS/US Fortran Programming Language Standards Technical Committee (J3/25-114r2), February 2025,

Many existing Fortran projects make extensive use of C preprocessor directives and macro expansion, despite the lack of an FPP standard. This is usually done to tailor the code to specific environments, such as target compilers or machines. Unfortunately, more complex use cases fail to be portable between different implementations. This is enough of a problem that WG 5 raised this as the number 2 issue to address in Fortran 202y, behind generics. This is not a new problem, as evidenced by the J3 discussions from the mid 1990s. The introduction of CoCo in Fortran 95 did not solve the problem, either, because it was not a mandatory part of the standard and because it was not compatible with the preprocessor syntax used by many existing Fortran projects. This document attempts to define the requirements for a mandatory Fortran preprocessor based on the preprocessor syntax already in common use today. The guiding principle is to promote Fortran program portability by defining consistent syntax and semantics of a useful subset of CPP. Some FPP behavior will be slightly different from CPP, in order to accommodate some Fortran idiosyncrasies. A major overarching goal of this effort is to standardize de facto current practice for preprocessing in Fortran compilers and code. It is the standard's responsibility to standardize syntax in order to settle minor divergences that have arisen amongst pre-standard FPP implementations, to the detriment of portability for end users.

Paper PASSED by unanimous consent at INCITS/US Fortran Programming Language Standards Technical Committee meeting #235

2024

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, "Parallel Runtime Interface for Fortran (PRIF) Specification, Revision 0.5", Lawrence Berkeley National Laboratory Tech Report, December 2024, LBNL 2001636, doi: 10.25344/S4CG6G

This document specifies an interface to support the parallel features of Fortran, named the Parallel Runtime Interface for Fortran (PRIF). PRIF is a proposed solution in which the runtime library is primarily responsible for implementing coarray allocation, deallocation and accesses, image synchronization, atomic operations, events, teams and collective subroutines. In this interface, the compiler is responsible for transforming the invocation of Fortran-level parallel features into procedure calls to the necessary PRIF subroutines. The interface is designed for portability across shared- and distributed-memory machines, different operating systems, and multiple architectures. Implementations of this interface are intended as an augmentation for the compiler's own runtime library. With an implementation-agnostic interface, alternative parallel runtime libraries may be developed that support the same interface. One benefit of this approach is the ability to vary the communication substrate. A central aim of this document is to define a parallel runtime interface in standard Fortran syntax, which enables us to leverage Fortran to succinctly express various properties of the procedure interfaces, including argument attributes.

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, "Parallel Runtime Interface for Fortran (PRIF): A Multi-Image Solution for LLVM Flang", Tenth Workshop on the LLVM Compiler Infrastructure in HPC (LLVM-HPC2024), Atlanta, Georgia, USA, IEEE, November 2024, doi: 10.25344/S4N017

- Download File: LLVM-HPC24_PRIF_Slides.pdf (pdf: 975 KB)

Fortran compilers that provide support for Fortran’s native parallel features often do so with a runtime library that depends on details of both the compiler implementation and the communication library, while others provide limited or no support at all. This paper introduces a new generalized interface that is both compiler- and runtime-library-agnostic, providing flexibility while fully supporting all of Fortran’s parallel features. The Parallel Runtime Interface for Fortran (PRIF) was developed to be portable across shared- and distributed-memory systems, with varying operating systems, toolchains and architectures. It achieves this by defining a set of Fortran procedures corresponding to each of the parallel features defined in the Fortran standard that may be invoked by a Fortran compiler and implemented by a runtime library. PRIF aims to be used as the solution for LLVM Flang to provide parallel Fortran support. This paper also briefly describes our PRIF prototype implementation: Caffeine.

Damian Rouson, Baboucarr Dibba, Katherine Rasmussen, Brad Richardson, David Torres, Yunhao Zhang, Ethan Gutmann, Kareem Ergawy, Michael Klemm, Sameer Shende, Just Write Fortran: Experiences with a Language-Based Alternative to MPI+X, Talk at IEEE/ACM Parallel Applications Workshop, Alternatives To MPI+X (PAW-ATM), November 2024, doi: 10.25344/S4H88D

Fortran 2023, with its "do concurrent" and coarray parallel programming features, displaces many uses of extra-language parallel programming models such as MPI, OpenMP, and OpenACC. The Cray, Intel, LFortran, LLVM, and NVIDIA compilers automatically parallelize do concurrent in shared memory. The Cray, Intel, and GNU compilers support coarrays in shared- and distributed-memory, while the NAG compiler supports coarrays in shared memory. Thus, language-based parallelism is emerging as a portable alternative to MPI+X.

This talk will present experiences with automatic "do concurrent" parallelization in the deep learning library Inference-Engine and coarray communication in the Intermediate Complexity Atmospheric Research (ICAR), respectively.

Katherine Rasmussen, Damian Rouson, Dan Bonachea, Brad Richardson, "A Full-Stack Exploration of Language-Based Parallelism in Fortran 2023", Poster at CARLA2024: Latin America High Performance Computing Conference, September 30, 2024, doi: 10.25344/S4RP5K

This poster explores native parallel features in Fortran 2023 through the lens of supporting applications with libraries, compilers, and parallel runtimes. The language revision informally named Fortran 2008 introduced parallelism in the form of Single Program Multiple Data (SPMD) execution with two broad feature sets: (1) loop-level parallelism via do concurrent and (2) a Partitioned Global Address Space (PGAS) comprised of distributed “coarray” data structures. Fortran’s native parallelism has demonstrated high performance [1] and reduced the burden of inserting what sometimes amounts to more directives than code. Several compilers support both feature sets, typically by translating do concurrent into serial do loops annotated by parallel directives and by translating SPMD/PGAS features into direct calls to a communication library. Our research focuses primarily on two questions: (1) can the compiler’s parallel runtime library be developed in the language being compiled (Fortran) and (2) can we define an interface to the runtime that liberates compilers from being hardwired to one runtime and vice versa. We are answering these questions by developing the Parallel Runtime Interface for Fortran (PRIF) [2] and the Co-Array Fortran Framework of Efficient Interfaces to Network Environments (Caffeine) [3]. Caffeine is initially targeting adoption by LLVM Flang, a new open-source Fortran compiler developed by a broad community in industry, academia, and government labs. We are also exploring the use of these features in Inference-Engine, a deep learning library designed to facilitate neural network training and inference for high-performance computing applications written in modern Fortran.

Leyba K, Hofmeyr S, Forrest S, Cannon J, Moses M, "SIMCoV-GPU: Accelerating an Agent-Based Model for Exascale", HPDC '24, August 30, 2024, doi: 10.1145/3625549.3658692

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, "Parallel Runtime Interface for Fortran (PRIF) Specification, Revision 0.4", Lawrence Berkeley National Laboratory Tech Report, July 12, 2024, LBNL 2001604, doi: 10.25344/S4WG64

This document specifies an interface to support the parallel features of Fortran, named the Parallel Runtime Interface for Fortran (PRIF). PRIF is a proposed solution in which the runtime library is responsible for coarray allocation, deallocation and accesses, image synchronization, atomic operations, events, and teams. In this interface, the compiler is responsible for transforming the invocation of Fortran-level parallel features into procedure calls to the necessary PRIF procedures. The interface is designed for portability across shared- and distributed-memory machines, different operating systems, and multiple architectures. Implementations of this interface are intended as an augmentation for the compiler’s own runtime library. With an implementation-agnostic interface, alternative parallel runtime libraries may be developed that support the same interface. One benefit of this approach is the ability to vary the communication substrate. A central aim of this document is to define a parallel runtime interface in standard Fortran syntax, which enables us to leverage Fortran to succinctly express various properties of the procedure interfaces, including argument attributes.

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, Parallel Runtime Interface for Fortran (PRIF): A Compiler/Runtime-Library Agnostic Interface to Support the Parallel Features of Fortran 2023, Platform for Advanced Scientific Computing (PASC) Modern Fortran Minisymposium, June 5, 2024,

- Download File: PRIF-PASC24.pdf (pdf: 1.6 MB)

Fortran 2023 natively supports single-program, multiple-data parallel programming with a partitioned global address space and collective subroutines, synchronization, atomics, locks, and more. Each of the four actively developed compilers that support Fortran’s parallel features uses its own parallel runtime library. The Parallel Runtime Interface for Fortran (PRIF) proposes to liberate compiler development from reliance on a single runtime and empower runtime developers to support more than one compiler. PRIF also aims to broaden the community of runtime developers to include the Fortran compiler’s users: Fortran programmers. PRIF does so by specifying the interface in Fortran, which makes it attractive to write the parallel runtime library in Fortran. Additionally, PRIF has been designed to be portable across both shared and distributed memory, varying architectures, as well as different operating systems. In this talk, I will describe the motivation behind the development of PRIF, describe the design of the interface itself and the benefits of adopting it. I will also provide a brief status report on the first PRIF implementation: Caffeine.

Damian Rouson, What Happens to a Dream Deferred? Chasing Automatic Offloading in Fortran 2023, Keynote Talk at the Nineteenth International Workshop on Automatic Performance Tuning (iWAPT 2024), May 31, 2024,

- Download File: iWAPT-2024-Keynote.pdf (pdf: 6.7 MB)

In 1951, Harlem Renaissance poet Langston Hughes asked this talk's titular question at the outset of a poem entitled "Harlem." Six years later, IBM mathematician John Backus developed Fortran, the world's first widely used high-level programming language. Backus went on to explore functional programming and to highlight the functional style in his Turing Award lecture in 1977, a year that also demarcates what one might consider the end of the classical era of Fortran. This talk will demonstrate how modern Fortran began to deliver on Backus's functional programming dream, starting with pure procedures in the 1995 standard. The talk will further demonstrate how this style culminated in a powerful and flexible facility for expressing independent iterations via the "do concurrent" construct, which the Fortran standard committee included in Fortran 2008 with the intention to facilitate automatic Graphics Processing Unit (GPU) programming. Fortran 2008 was published in 2010, but it took another decade for compilers to deliver on the promise of automatic GPU offloading. This talk will detail the trials and tribulations of Berkeley Lab's Fortran team in chasing the automatic offloading dream in our Inference-Engine deep learning library and Matcha high-performance computing (HPC) application.

Dan Bonachea, Paul H. Hargrove, "GASNet-EX Specification Collection, Revision 2024.5.0", Lawrence Berkeley National Laboratory Tech Report, May 2024, LBNL 2001595, doi: 10.25344/S4160B

GASNet-EX is a portable, open-source, high-performance communication library designed to efficiently support the networking requirements of PGAS runtime systems and other alternative models in emerging exascale systems. It provides network-independent, high-performance communication primitives including Remote Memory Access (RMA) and Active Messages (AM). GASNet-EX is an evolution of the popular GASNet communication system, building upon over 20 years of lessons learned, and the primary goals are high performance, interface portability, and expressiveness. The library has been used to implement parallel programming models and libraries such as UPC, UPC++, Fortran coarrays, Legion, Chapel, and many others.

This anthology collects together the four separate volumes that currently comprise the GASNet-EX specification, as of the 2024.5.0 release of GASNet-EX.

Dan Bonachea, Katherine Rasmussen, Brad Richardson, Damian Rouson, "Parallel Runtime Interface for Fortran (PRIF) Specification, Revision 0.3", Lawrence Berkeley National Laboratory Tech Report, May 3, 2024, LBNL 2001590, doi: 10.25344/S4501W

This document specifies an interface to support the parallel features of Fortran, named the Parallel Runtime Interface for Fortran (PRIF). PRIF is a proposed solution in which the runtime library is responsible for coarray allocation, deallocation and accesses, image synchronization, atomic operations, events, and teams. In this interface, the compiler is responsible for transforming the invocation of Fortran-level parallel features into procedure calls to the necessary PRIF procedures. The interface is designed for portability across shared- and distributed-memory machines, different operating systems, and multiple architectures. Implementations of this interface are intended as an augmentation for the compiler’s own runtime library. With an implementation-agnostic interface, alternative parallel runtime libraries may be developed that support the same interface. One benefit of this approach is the ability to vary the communication substrate. A central aim of this document is to define a parallel runtime interface in standard Fortran syntax, which enables us to leverage Fortran to succinctly express various properties of the procedure interfaces, including argument attributes.

2023

Damian Rouson, Brad Richardson, Dan Bonachea, Katherine Rasmussen, "Parallel Runtime Interface for Fortran (PRIF) Design Document, Revision 0.2", Lawrence Berkeley National Laboratory Tech Report, December 20, 2023, LBNL 2001563, doi: 10.25344/S4DG6S

This design document proposes an interface to support the parallel features of Fortran, named the Parallel Runtime Interface for Fortran (PRIF). PRIF is a proposed solution in which the runtime library is responsible for coarray allocation, deallocation and accesses, image synchronization, atomic operations, events, and teams. In this interface, the compiler is responsible for transforming the invocation of Fortran-level parallel features into procedure calls to the necessary PRIF procedures. The interface is designed for portability across shared- and distributed-memory machines, different operating systems, and multiple architectures. Implementations of this interface are intended as an augmentation for the compiler’s own runtime library. With an implementation-agnostic interface, alternative parallel runtime libraries may be developed that support the same interface. One benefit of this approach is the ability to vary the communication substrate. A central aim of this document is to define a parallel runtime interface in standard Fortran syntax, which enables us to leverage Fortran to succinctly express various properties of the procedure interfaces, including argument attributes.

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2023.9.0", Lawrence Berkeley National Laboratory Tech Report LBNL-2001561, December 2023, doi: 10.25344/S4J592

UPC++ is a C++ library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

John Bachan, Scott B. Baden, Dan Bonachea, Johnny Corbino, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, Daniel Waters, "UPC++ v1.0 Programmer’s Guide, Revision 2023.9.0", Lawrence Berkeley National Laboratory Tech Report LBNL-2001560, December 2023, doi: 10.25344/S4P01J

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming. It is designed for writing efficient, scalable parallel programs on distributed-memory parallel computers. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). The UPC++ control model is single program, multiple-data (SPMD), with each separate constituent process having access to local memory as it would in C++. The PGAS memory model additionally provides one-sided RMA communication to a global address space, which is allocated in shared segments that are distributed over the processes. UPC++ also features Remote Procedure Call (RPC) communication, making it easy to move computation to operate on data that resides on remote processes. UPC++ was designed to support exascale high-performance computing, and the library interfaces and implementation are focused on maximizing scalability. In UPC++, all communication operations are syntactically explicit, which encourages programmers to consider the costs associated with communication and data movement. Moreover, all communication operations are asynchronous by default, encouraging programmers to seek opportunities for overlapping communication latencies with other useful work. UPC++ provides expressive and composable abstractions designed for efficiently managing aggressive use of asynchrony in programs. Together, these design principles are intended to enable programmers to write applications using UPC++ that perform well even on hundreds of thousands of cores.

Julian Bellavita, Mathias Jacquelin, Esmond G. Ng, Dan Bonachea, Johnny Corbino, Paul H. Hargrove, "symPACK: A GPU-Capable Fan-Out Sparse Cholesky Solver", 2023 IEEE/ACM Parallel Applications Workshop, Alternatives To MPI+X (PAW-ATM'23), ACM, November 13, 2023, doi: 10.1145/3624062.3624600

Sparse symmetric positive definite systems of equations are ubiquitous in scientific workloads and applications. Parallel sparse Cholesky factorization is the method of choice for solving such linear systems. Therefore, the development of parallel sparse Cholesky codes that can efficiently run on today’s large-scale heterogeneous distributed-memory platforms is of vital importance. Modern supercomputers offer nodes that contain a mix of CPUs and GPUs. To fully utilize the computing power of these nodes, scientific codes must be adapted to offload expensive computations to GPUs.

We present symPACK, a GPU-capable parallel sparse Cholesky solver that uses one-sided communication primitives and remote procedure calls provided by the UPC++ library. We also utilize the UPC++ "memory kinds" feature to enable efficient communication of GPU-resident data. We show that on a number of large problems, symPACK outperforms comparable state-of-the-art GPU-capable Cholesky factorization codes by up to 14x on the NERSC Perlmutter supercomputer.

Michelle Mills Strout, Damian Rouson, Amir Kamil, Dan Bonachea, Jeremiah Corrado, Paul H. Hargrove, Introduction to High-Performance Parallel Distributed Computing using Chapel, UPC++ and Coarray Fortran, Tutorial at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC23), November 12, 2023,

A majority of HPC system users utilize scripting languages such as Python to prototype their computations, coordinate their large executions, and analyze the data resulting from their computations. Python is great for these many uses, but it frequently falls short when significantly scaling up the amount of data and computation, as required to fully leverage HPC system resources. In this tutorial, we show how example computations such as heat diffusion, k-mer counting, file processing, and distributed maps can be written to efficiently leverage distributed computing resources in the Chapel, UPC++, and Fortran parallel programming models.

The tutorial is targeted for users with little-to-no parallel programming experience, but everyone is welcome. A partial differential equation example will be demonstrated in all three programming models. That example and others will be provided to attendees in a virtual environment. Attendees will be shown how to compile and run these programming examples, and the virtual environment will remain available to attendees throughout the conference, along with Slack-based interactive tech support.

Come join us to learn about some productive and performant parallel programming models!

A. Dubey, T. Ben-Nun, B. L. Chamberlain, B. R. de Supinski, D. Rouson, "Performance on HPC Platforms Is Possible Without C++", Computing in Science & Engineering, September 2023, 25 (5):48-52, doi: 10.1109/MCSE.2023.3329330

Computing at large scales has become extremely challenging due to increasing heterogeneity in both hardware and software. More and more scientific workflows must tackle a range of scales and use machine learning and AI intertwined with more traditional numerical modeling methods, placing more demands on computational platforms. These constraints indicate a need to fundamentally rethink the way computational science is done and the tools that are needed to enable these complex workflows. The current set of C++-based solutions may not suffice, and relying exclusively upon C++ may not be the best option, especially because several newer languages and boutique solutions offer more robust design features to tackle the challenges of heterogeneity. In June 2023, we held a mini symposium that explored the use of newer languages and heterogeneity solutions that are not tied to C++ and that offer options beyond template metaprogramming and Parallel. For for performance and portability. We describe some of the presentations and discussion from the mini symposium in this article.

"Pagoda Updates PGAS Programming With Scalable Data Structures And Aggressively Asynchronous Communication", Rob Farber, Exascale Computing Project News, August 28, 2023, doi: 10.25344/S4SP4H

Riley R, Bowers RM, Camargo AP, Campbell A, Egan R, Eloe-Fadrosh EA, Foster B, Hofmeyr S, Huntemann M, Kellom M, Kimbrel JA, Oliker L, Yelick K, Pett-Ridge J, Salamov A, Varghese NJ, Clum A, "Terabase-Scale Coassembly of a Tropical Soil Microbiome", Microbiology Spectrum, August 17, 2023, doi: 10.1128/SPECTRUM.00200-23

Michelle Mills Strout, Damian Rouson, Amir Kamil, Dan Bonachea, Jeremiah Corrado, Paul H. Hargrove, Introduction to High-Performance Parallel Distributed Computing using Chapel, UPC++ and Coarray Fortran (CUF23), ECP/NERSC/OLCF Tutorial, July 2023,

A majority of HPC system users utilize scripting languages such as Python to prototype their computations, coordinate their large executions, and analyze the data resulting from their computations. Python is great for these many uses, but it frequently falls short when significantly scaling up the amount of data and computation, as required to fully leverage HPC system resources. In this tutorial, we show how example computations such as heat diffusion, k-mer counting, file processing, and distributed maps can be written to efficiently leverage distributed computing resources in the Chapel, UPC++, and Fortran parallel programming models. This tutorial should be accessible to users with little-to-no parallel programming experience, and everyone is welcome. A partial differential equation example will be demonstrated in all three programming models along with performance and scaling results on big machines. That example and others will be provided in a cloud instance and Docker container. Attendees will be shown how to compile and run these programming examples, and provided opportunities to experiment with different parameters and code alternatives while being able to ask questions and share their own observations. Come join us to learn about some productive and performant parallel programming models!

Secondary tutorial sites by event sponsors:

Paul H. Hargrove, PGAS Programming Models: My 20-year Perspective, Keynote for 10th Annual Chapel Implementers and Users Workshop (CHIUW 2023), June 2, 2023, doi: 10.25344/S4K59C

Paul H. Hargrove has been involved in the world of Partitioned Global Address Space (PGAS) programming models since 1999, before he knew such a thing existed. Early involvement in the GASNet communications library as used in implementations of UPC, Titanium and Co-array Fortran convinced Paul that one could have productivity and performance without sacrificing one for the other. Since then he has been among the apostates who work to overturn the belief that message-passing is the only (or best) way to program for High-Performance Computing (HPC). Paul has been fortunate to witness the history of the PGAS community through several rare opportunities, including interactions made possible by the wide adoption of GASNet and through operating a PGAS booth at the annual SC conferences from 2007 to 2017. In this talk, Paul will share some highlights of his experiences across 24 years of PGAS history. Among these is the DARPA High Productivity Computing Systems (HPCS) project which helped give birth to Chapel.

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2023.3.0", Lawrence Berkeley National Laboratory Tech Report, March 31, 2023, LBNL 2001516, doi: 10.25344/S46W2J

UPC++ is a C++ library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

John Bachan, Scott B. Baden, Dan Bonachea, Johnny Corbino, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, Daniel Waters, "UPC++ v1.0 Programmer’s Guide, Revision 2023.3.0", Lawrence Berkeley National Laboratory Tech Report, March 30, 2023, LBNL 2001517, doi: 10.25344/S43591

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming. It is designed for writing efficient, scalable parallel programs on distributed-memory parallel computers. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). The UPC++ control model is single program, multiple-data (SPMD), with each separate constituent process having access to local memory as it would in C++. The PGAS memory model additionally provides one-sided RMA communication to a global address space, which is allocated in shared segments that are distributed over the processes. UPC++ also features Remote Procedure Call (RPC) communication, making it easy to move computation to operate on data that resides on remote processes.

UPC++ was designed to support exascale high-performance computing, and the library interfaces and implementation are focused on maximizing scalability. In UPC++, all communication operations are syntactically explicit, which encourages programmers to consider the costs associated with communication and data movement. Moreover, all communication operations are asynchronous by default, encouraging programmers to seek opportunities for overlapping communication latencies with other useful work. UPC++ provides expressive and composable abstractions designed for efficiently managing aggressive use of asynchrony in programs. Together, these design principles are intended to enable programmers to write applications using UPC++ that perform well even on hundreds of thousands of cores.

Johnny Corbino, UPC++’s Crucial Role in Quantum Chemistry, UPC++ Community BOF Virtual Symposium, February 16, 2023, doi: 10.25344/S4XG6F

Paul H. Hargrove, Dan Bonachea, Johnny Corbino, Amir Kamil, Colin A. MacLean, Damian Rouson, Daniel Waters, "UPC++ and GASNet: PGAS Support for Exascale Apps and Runtimes (ECP'23)", Poster at Exascale Computing Project (ECP) Annual Meeting 2023, January 2023,

The Pagoda project is developing a programming system to support HPC application development using the Partitioned Global Address Space (PGAS) model. The first component is GASNet-EX, a portable, high-performance, global-address-space communication library. The second component is UPC++, a C++ template library. Together, these libraries enable agile, lightweight communication such as arises in irregular applications, libraries and frameworks running on exascale systems.

GASNet-EX is a portable, high-performance communications middleware library which leverages hardware support to implement Remote Memory Access (RMA) and Active Message communication primitives. GASNet-EX supports a broad ecosystem of alternative HPC programming models, including UPC++, Legion, Chapel and multiple implementations of UPC and Fortran Coarrays. GASNet-EX is implemented directly over the native APIs for networks of interest in HPC. The tight semantic match of GASNet-EX APIs to the client requirements and hardware capabilities often yields better performance than competing libraries.

UPC++ provides high-level productivity abstractions appropriate for Partitioned Global Address Space (PGAS) programming such as: remote memory access (RMA), remote procedure call (RPC), support for accelerators (e.g. GPUs), and mechanisms for aggressive asynchrony to hide communication costs. UPC++ implements communication using GASNet-EX, delivering high performance and portability from laptops to exascale supercomputers. HPC application software using UPC++ includes: MetaHipMer2 metagenome assembler, SIMCoV viral propagation simulation, NWChemEx TAMM, and graph computation kernels from ExaGraph.

2022

"Berkeley Lab’s Networking Middleware GASNet Turns 20: Now, GASNet-EX is Gearing Up for the Exascale Era", Linda Vu, HPCWire (Lawrence Berkeley National Laboratory CS Area Communications), December 7, 2022, doi: 10.25344/S4BP4G

GASNet Celebrates 20th Anniversary

For 20 years, Berkeley Lab’s GASNet has been fueling developers’ ability to tap the power of massively parallel supercomputers more effectively. The middleware was recently upgraded to support exascale scientific applications.

Katherine Rasmussen, Damian Rouson, Naje George, Dan Bonachea, Hussain Kadhem, Brian Friesen, "Agile Acceleration of LLVM Flang Support for Fortran 2018 Parallel Programming", Research Poster at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC22), November 2022, doi: 10.25344/S4CP4S

The LLVM Flang compiler ("Flang") is currently Fortran 95 compliant, and the frontend can parse Fortran 2018. However, Flang does not have a comprehensive 2018 test suite and does not fully implement the static semantics of the 2018 standard. We are investigating whether agile software development techniques, such as pair programming and test-driven development (TDD), can help Flang to rapidly progress to Fortran 2018 compliance. Because of the paramount importance of parallelism in high-performance computing, we are focusing on Fortran’s parallel features, commonly denoted “Coarray Fortran.” We are developing what we believe are the first exhaustive, open-source tests for the static semantics of Fortran 2018 parallel features, and contributing them to the LLVM project. A related effort involves writing runtime tests for parallel 2018 features and supporting those tests by developing a new parallel runtime library: the CoArray Fortran Framework of Efficient Interfaces to Network Environments (Caffeine).

Paul H. Hargrove, Dan Bonachea, "GASNet-EX RMA Communication Performance on Recent Supercomputing Systems", 5th Annual Parallel Applications Workshop, Alternatives To MPI+X (PAW-ATM'22), November 2022, doi: 10.25344/S40C7D

Partitioned Global Address Space (PGAS) programming models, typified by systems such as Unified Parallel C (UPC) and Fortran coarrays, expose one-sided Remote Memory Access (RMA) communication as a key building block for High Performance Computing (HPC) applications. Architectural trends in supercomputing make such programming models increasingly attractive, and newer, more sophisticated models such as UPC++, Legion and Chapel that rely upon similar communication paradigms are gaining popularity.

GASNet-EX is a portable, open-source, high-performance communication library designed to efficiently support the networking requirements of PGAS runtime systems and other alternative models in emerging exascale machines. The library is an evolution of the popular GASNet communication system, building upon 20 years of lessons learned. We present microbenchmark results which demonstrate the RMA performance of GASNet-EX is competitive with MPI implementations on four recent, high-impact, production HPC systems. These results are an update relative to previously published results on older systems. The networks measured here are representative of hardware currently used in six of the top ten fastest supercomputers in the world, and all of the exascale systems on the U.S. DOE road map.

Damian Rouson, Dan Bonachea, "Caffeine: CoArray Fortran Framework of Efficient Interfaces to Network Environments", Proceedings of the Eighth Annual Workshop on the LLVM Compiler Infrastructure in HPC (LLVM-HPC2022), Dallas, Texas, USA, IEEE, November 2022, doi: 10.25344/S4459B

This paper provides an introduction to the CoArray Fortran Framework of Efficient Interfaces to Network Environments (Caffeine), a parallel runtime library built atop the GASNet-EX exascale networking library. Caffeine leverages several non-parallel Fortran features to write type- and rank-agnostic interfaces and corresponding procedure definitions that support parallel Fortran 2018 features, including communication, collective operations, and related services. One major goal is to develop a runtime library that can eventually be considered for adoption by LLVM Flang, enabling that compiler to support the parallel features of Fortran. The paper describes the motivations behind Caffeine's design and implementation decisions, details the current state of Caffeine's development, and previews future work. We explain how the design and implementation offer benefits related to software sustainability by lowering the barrier to user contributions, reducing complexity through the use of Fortran 2018 C-interoperability features, and high performance through the use of a lightweight communication substrate.

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2022.9.0", Lawrence Berkeley National Laboratory Tech Report, September 30, 2022, LBNL 2001480, doi: 10.25344/S4M59P

UPC++ is a C++ library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

John Bachan, Scott B. Baden, Dan Bonachea, Johnny Corbino, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, Daniel Waters, "UPC++ v1.0 Programmer’s Guide, Revision 2022.9.0", Lawrence Berkeley National Laboratory Tech Report, September 30, 2022, LBNL 2001479, doi: 10.25344/S4QW26

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming. It is designed for writing efficient, scalable parallel programs on distributed-memory parallel computers. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). The UPC++ control model is single program, multiple-data (SPMD), with each separate constituent process having access to local memory as it would in C++. The PGAS memory model additionally provides one-sided RMA communication to a global address space, which is allocated in shared segments that are distributed over the processes. UPC++ also features Remote Procedure Call (RPC) communication, making it easy to move computation to operate on data that resides on remote processes.

UPC++ was designed to support exascale high-performance computing, and the library interfaces and implementation are focused on maximizing scalability. In UPC++, all communication operations are syntactically explicit, which encourages programmers to consider the costs associated with communication and data movement. Moreover, all communication operations are asynchronous by default, encouraging programmers to seek opportunities for overlapping communication latencies with other useful work. UPC++ provides expressive and composable abstractions designed for efficiently managing aggressive use of asynchrony in programs. Together, these design principles are intended to enable programmers to write applications using UPC++ that perform well even on hundreds of thousands of cores.

Dan Bonachea, Paul H. Hargrove, An Introduction to GASNet-EX for Chapel Users, 9th Annual Chapel Implementers and Users Workshop (CHIUW 2022), June 10, 2022,

Have you ever typed "export CHPL_COMM=gasnet"? If you’ve used Chapel with multi-locale support on a system without "Cray" in the model name, then you’ve probably used GASNet. Did you ever wonder what GASNet is? What GASNet should mean to you? This talk aims to answer those questions and more. Chapel has system-specific implementations of multi-locale communication for Cray-branded systems including the Cray XC and HPE Cray EX lines. On other systems, Chapel communication uses the GASNet communication library embedded in third-party/gasnet. In this talk, that third-party will introduce itself to you in the first person.

Paul H. Hargrove, Dan Bonachea, Amir Kamil, Colin A. MacLean, Damian Rouson, Daniel Waters, "UPC++ and GASNet: PGAS Support for Exascale Apps and Runtimes (ECP'22)", Poster at Exascale Computing Project (ECP) Annual Meeting 2022, May 5, 2022,

We present UPC++ and GASNet-EX, distributed libraries which together enable one-sided, lightweight communication such as arises in irregular applications, libraries and frameworks running on exascale systems.

UPC++ is a C++ PGAS library, featuring APIs for Remote Procedure Call (RPC) and for Remote Memory Access (RMA) to host and GPU memories. The combination of these two features yields performant, scalable solutions to problems of interest within ECP.

GASNet-EX is PGAS communication middleware, providing the foundation for UPC++ and Legion, plus numerous non-ECP clients. GASNet-EX RMA interfaces match or exceed the performance of MPI-RMA across a variety of pre-exascale systems.

John Bachan, Scott B. Baden, Dan Bonachea, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, Daniel Waters, "UPC++ v1.0 Programmer’s Guide, Revision 2022.3.0", Lawrence Berkeley National Laboratory Tech Report, March 2022, LBNL 2001453, doi: 10.25344/S41C7Q

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming. It is designed for writing efficient, scalable parallel programs on distributed-memory parallel computers. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). The UPC++ control model is single program, multiple-data (SPMD), with each separate constituent process having access to local memory as it would in C++. The PGAS memory model additionally provides one-sided RMA communication to a global address space, which is allocated in shared segments that are distributed over the processes. UPC++ also features Remote Procedure Call (RPC) communication, making it easy to move computation to operate on data that resides on remote processes.

UPC++ was designed to support exascale high-performance computing, and the library interfaces and implementation are focused on maximizing scalability. In UPC++, all communication operations are syntactically explicit, which encourages programmers to consider the costs associated with communication and data movement. Moreover, all communication operations are asynchronous by default, encouraging programmers to seek opportunities for overlapping communication latencies with other useful work. UPC++ provides expressive and composable abstractions designed for efficiently managing aggressive use of asynchrony in programs. Together, these design principles are intended to enable programmers to write applications using UPC++ that perform well even on hundreds of thousands of cores.

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2022.3.0", Lawrence Berkeley National Laboratory Tech Report, March 2022, LBNL 2001452, doi: 10.25344/S4530J

UPC++ is a C++ library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

2021

Daniel Waters, Colin A. MacLean, Dan Bonachea, Paul H. Hargrove, "Demonstrating UPC++/Kokkos Interoperability in a Heat Conduction Simulation (Extended Abstract)", Parallel Applications Workshop, Alternatives To MPI+X (PAW-ATM), November 2021, doi: 10.25344/S4630V

We describe the replacement of MPI with UPC++ in an existing Kokkos code that simulates heat conduction within a rectangular 3D object, as well as an analysis of the new code’s performance on CUDA accelerators. The key challenges were packing the halos in Kokkos data structures in a way that allowed for UPC++ remote memory access, and streamlining synchronization costs. Additional UPC++ abstractions used included global pointers, distributed objects, remote procedure calls, and futures. We also make use of the device allocator concept to facilitate data management in memory with unique properties, such as GPUs. Our results demonstrate that despite the algorithm’s good semantic match to message passing abstractions, straightforward modifications to use UPC++ communication deliver vastly improved performance and scalability in the common case. We find the one-sided UPC++ version written in a natural way exhibits good performance, whereas the message-passing version written in a straightforward way exhibits performance anomalies. We argue this represents a productivity benefit for one-sided communication models.

Amir Kamil, Dan Bonachea, "Optimization of Asynchronous Communication Operations through Eager Notifications", Parallel Applications Workshop, Alternatives To MPI+X (PAW-ATM), November 2021, doi: 10.25344/S42C71

UPC++ is a C++ library implementing the Asynchronous Partitioned Global Address Space (APGAS) model. We propose an enhancement to the completion mechanisms of UPC++ used to synchronize communication operations that is designed to reduce overhead for on-node operations. Our enhancement permits eager delivery of completion notification in cases where the data transfer semantics of an operation happen to complete synchronously, for example due to the use of shared-memory bypass. This semantic relaxation allows removing significant overhead from the critical path of the implementation in such cases. We evaluate our results on three different representative systems using a combination of microbenchmarks and five variations of the the HPCChallenge RandomAccess benchmark implemented in UPC++ and run on a single node to accentuate the impact of locality. We find that in RMA versions of the benchmark written in a straightforward manner (without manually optimizing for locality), the new eager notification mode can provide up to a 25% speedup when synchronizing with promises and up to a 13.5x speedup when synchronizing with conjoined futures. We also evaluate our results using a graph matching application written with UPC++ RMA communication, where we measure overall speedups of as much as 11% in single-node runs of the unmodified application code, due to our transparent enhancements.

Paul H. Hargrove, Dan Bonachea, Colin A. MacLean, Daniel Waters, "GASNet-EX Memory Kinds: Support for Device Memory in PGAS Programming Models", The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC'21) Research Poster, November 2021, doi: 10.25344/S4P306

Lawrence Berkeley National Lab is developing a programming system to support HPC application development using the Partitioned Global Address Space (PGAS) model. This work includes two major components: UPC++ (a C++ template library) and GASNet-EX (a portable, high-performance communication library). This poster describes recent advances in GASNet-EX to efficiently implement Remote Memory Access (RMA) operations to and from memory on accelerator devices such as GPUs. Performance is illustrated via benchmark results from UPC++ and the Legion programming system, both using GASNet-EX as their communications library.

Katherine A. Yelick, Amir Kamil, Damian Rouson, Dan Bonachea, Paul H. Hargrove, UPC++: An Asynchronous RMA/RPC Library for Distributed C++ Applications (SC21), Tutorial at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC21), November 15, 2021,

UPC++ is a C++ library supporting Partitioned Global Address Space (PGAS) programming. UPC++ offers low-overhead one-sided Remote Memory Access (RMA) and Remote Procedure Calls (RPC), along with future/promise-based asynchrony to express dependencies between computation and asynchronous data movement. UPC++ supports simple/regular data structures as well as more elaborate distributed applications where communication is fine-grained and/or irregular. UPC++ provides a uniform abstraction for one-sided RMA between host and GPU/accelerator memories anywhere in the system. UPC++'s support for aggressive asynchrony enables applications to effectively overlap communication and reduce latency stalls, while the underlying GASNet-EX communication library delivers efficient low-overhead RMA/RPC on HPC networks.

This tutorial introduces UPC++, covering the memory and execution models and basic algorithm implementations. Participants gain hands-on experience incorporating UPC++ features into application proxy examples. We examine a few UPC++ applications with irregular communication (metagenomic assembler and COVID-19 simulation) and describe how they utilize UPC++ to optimize communication performance.

John Bachan, Scott B. Baden, Dan Bonachea, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, Daniel Waters, "UPC++ v1.0 Programmer’s Guide, Revision 2021.9.0", Lawrence Berkeley National Laboratory Tech Report, September 2021, LBNL 2001424, doi: 10.25344/S4SW2T

UPC++ is a C++ library that supports Partitioned Global Address Space (PGAS) programming. It is designed for writing efficient, scalable parallel programs on distributed-memory parallel computers. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). The UPC++ control model is single program, multiple-data (SPMD), with each separate constituent process having access to local memory as it would in C++. The PGAS memory model additionally provides one-sided RMA communication to a global address space, which is allocated in shared segments that are distributed over the processes. UPC++ also features Remote Procedure Call (RPC) communication, making it easy to move computation to operate on data that resides on remote processes.

UPC++ was designed to support exascale high-performance computing, and the library interfaces and implementation are focused on maximizing scalability. In UPC++, all communication operations are syntactically explicit, which encourages programmers to consider the costs associated with communication and data movement. Moreover, all communication operations are asynchronous by default, encouraging programmers to seek opportunities for overlapping communication latencies with other useful work. UPC++ provides expressive and composable abstractions designed for efficiently managing aggressive use of asynchrony in programs. Together, these design principles are intended to enable programmers to write applications using UPC++ that perform well even on hundreds of thousands of cores.

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2021.9.0", Lawrence Berkeley National Laboratory Tech Report, September 2021, LBNL 2001425, doi: 10.25344/S4XK53

UPC++ is a C++ library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

Paul H. Hargrove, Dan Bonachea, Max Grossman, Amir Kamil, Colin A. MacLean, Daniel Waters, "UPC++ and GASNet: PGAS Support for Exascale Apps and Runtimes (ECP'21)", Poster at Exascale Computing Project (ECP) Annual Meeting 2021, April 2021,

We present UPC++ and GASNet-EX, which together enable one-sided, lightweight communication such as arises in irregular applications, libraries and frameworks running on exascale systems.

UPC++ is a C++ PGAS library, featuring APIs for Remote Memory Access (RMA) and Remote Procedure Call (RPC). The combination of these two features yields performant, scalable solutions to problems of interest within ECP.

GASNet-EX is PGAS communication middleware, providing the foundation for UPC++ and Legion, plus numerous non-ECP clients. GASNet-EX RMA interfaces match or exceed the performance of MPI-RMA across a variety of pre-exascale systems

Dan Bonachea, Amir Kamil, "UPC++ v1.0 Specification, Revision 2021.3.0", Lawrence Berkeley National Laboratory Tech Report, March 31, 2021, LBNL 2001388, doi: 10.25344/S4K881

UPC++ is a C++11 library providing classes and functions that support Partitioned Global Address Space (PGAS) programming. The key communication facilities in UPC++ are one-sided Remote Memory Access (RMA) and Remote Procedure Call (RPC). All communication operations are syntactically explicit and default to non-blocking; asynchrony is managed through the use of futures, promises and continuation callbacks, enabling the programmer to construct a graph of operations to execute asynchronously as high-latency dependencies are satisfied. A global pointer abstraction provides system-wide addressability of shared memory, including host and accelerator memories. The parallelism model is primarily process-based, but the interface is thread-safe and designed to allow efficient and expressive use in multi-threaded applications. The interface is designed for extreme scalability throughout, and deliberately avoids design features that could inhibit scalability.

Dan Bonachea, GASNet-EX: A High-Performance, Portable Communication Library for Exascale, Berkeley Lab – CS Seminar, March 10, 2021,

- Download File: GASNet-2021-LBL-seminar-slides.pdf (pdf: 9.1 MB)

Partitioned Global Address Space (PGAS) models, pioneered by languages such as Unified Parallel C (UPC) and Co-Array Fortran, expose one-sided communication as a key building block for High Performance Computing (HPC) applications. Architectural trends in supercomputing make such programming models increasingly attractive, and newer, more sophisticated models such as UPC++, Legion and Chapel that rely upon similar communication paradigms are gaining popularity.

GASNet-EX is a portable, open-source, high-performance communication library designed to efficiently support the networking requirements of PGAS runtime systems and other alternative models in future exascale machines. The library is an evolution of the popular GASNet communication system, building on 20 years of lessons learned. We describe several features and enhancements that have been introduced to address the needs of modern runtimes and exploit the hardware capabilities of emerging systems. Microbenchmark results demonstrate the RMA performance of GASNet-EX is competitive with several MPI implementations on current systems. GASNet-EX provides communication services that help to deliver speedups in HPC applications written using the UPC++ library, enabling new science on pre-exascale systems.

2020

Katherine A. Yelick, Amir Kamil, Dan Bonachea, Paul H Hargrove, UPC++: An Asynchronous RMA/RPC Library for Distributed C++ Applications (SC20), Tutorial at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC20), November 10, 2020,

This tutorial introduces basic concepts and advanced optimization techniques of UPC++. We discuss the UPC++ memory and execution models and examine basic algorithm implementations. Participants gain hands-on experience incorporating UPC++ features into several application examples. We also examine two irregular applications (metagenomic assembler and multifrontal sparse solver) and describe how they leverage UPC++ features to optimize communication performance.

John Bachan, Scott B. Baden, Dan Bonachea, Max Grossman, Paul H. Hargrove, Steven Hofmeyr, Mathias Jacquelin, Amir Kamil, Brian van Straalen, "UPC++ v1.0 Programmer’s Guide, Revision 2020.10.0", Lawrence Berkeley National Laboratory Tech Report, October 2020, LBNL 2001368, doi: 10.25344/S4HG6Q