Pore models track reactions in underground carbon capture

September 25, 2014

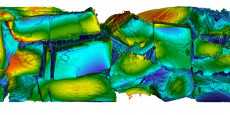

Computed pH on calcite grains at 1 micron resolution. The iridescent grains mimic crushed material geoscientists extract from saline aquifers deep underground to study with microscopes. Researchers want to model what happens to the crystals’ geochemistry when the greenhouse gas carbon dioxide is injected underground for sequestration. Image courtesy of David Trebotich, Lawrence Berkeley National Laboratory.

Using tailor-made software running on top-tier supercomputers, a Lawrence Berkeley National Laboratory team is creating microscopic pore-scale simulations that complement or push beyond laboratory findings.

The models of microscopic underground pores could help scientists evaluate ways to store carbon dioxide produced by power plants, keeping it from contributing to global climate change.

The models could be a first, says David Trebotich, the project’s principal investigator. “I’m not aware of any other group that can do this, not at the scale at which we are doing it, both in size and computational resources, as well as the geochemistry.” His evidence is a colorful portrayal of jumbled calcite crystals derived solely from mathematical equations.

The iridescent menagerie is intended to act just like the real thing: minerals geoscientists extract from saline aquifers deep underground. The goal is to learn what will happen when fluids pass through the material should power plants inject carbon dioxide underground.

Lab experiments can only measure what enters and exits the model system. Now modelers would like to identify more of what happens within the tiny pores of underground materials, as chemicals dissolve in some places but precipitate in others, potentially creating preferential flow paths or even clogs.

Geoscientists give Trebotich’s group of modelers microscopic computed tomography images of their field samples (CT, the same technique used for hospital scans). That lets both camps probe an anomaly: reactions in the tiny pores happen much more slowly in real aquifers than they do in laboratories.

Going deep

Deep saline aquifers are underground formations of salty water found in sedimentary basins all over the planet. Scientists think they’re the best deep geological feature to store carbon dioxide from power plants.

But experts need to know whether the greenhouse gas will stay bottled up as more and more of it is injected, spreading a fluid plume and building up pressure. “If it’s not going to stay there (geoscientists) will want to know where it is going to go and how long that is going to take,” says Trebotich, who is a computational scientist in Berkeley Lab’s Applied Numerical Algorithms Group.

He hopes their simulation results ultimately will translate to field scale, where “you’re going to be able to model a CO2 plume over a hundred years’ time and kilometers in distance.” But for now his group’s focus is at the microscale, with attention toward the even smaller nanoscale.

At such tiny dimensions, flow, chemical transport, mineral dissolution and mineral precipitation occur within the pores where individual grains and fluids commingle, says a 2013 paper Trebotich coauthored with geoscientists Carl Steefel (also of Berkeley Lab) and Sergi Molins in the journal Reviews in Mineralogy and Geochemistry.

These dynamics, the paper added, create uneven conditions that can produce new structures and self-organized materials – nonlinear behavior that can be hard to describe mathematically.

Modeling at 1 micron resolution, his group has achieved “the largest pore-scale reactive flow simulation ever attempted” as well as “the first-ever large-scale simulation of pore-scale reactive transport processes on real-pore-space geometry as obtained from experimental data,” says the 2012 annual report of the lab’s National Energy Research Scientific Computing Center (NERSC).

The simulation required about 20 million processor hours using 49,152 of the 153,216 computing cores in Hopper, a Cray XE6 that at the time was NERSC’s flagship supercomputer.
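To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes that a processor hour means one core running for one hour and that all 49,152 cores were busy for the whole run; the article states neither, so the wall-clock estimate is only indicative.

```python
# Figures quoted in the article.
PROCESSOR_HOURS = 20_000_000   # "about 20 million processor hours"
CORES_USED = 49_152            # cores used for the run
CORES_TOTAL = 153_216          # total computing cores in Hopper


def wall_clock_hours(processor_hours, cores):
    """Assumption: processor hours = cores x wall-clock hours."""
    return processor_hours / cores


hours = wall_clock_hours(PROCESSOR_HOURS, CORES_USED)
days = hours / 24
machine_fraction = CORES_USED / CORES_TOTAL

print(f"{hours:.0f} hours (~{days:.0f} days) on {machine_fraction:.0%} of Hopper")
```

Under those assumptions the run works out to roughly 17 days of continuous computing on about a third of the machine.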

“As CO2 is pumped underground, it can react chemically with underground minerals and brine in various ways, sometimes resulting in mineral dissolution and precipitation, which can change the porous structure of the aquifer,” the NERSC report says. “But predicting these changes is difficult because these processes take place at the pore scale and cannot be calculated using macroscopic models.

“The dissolution rates of many minerals have been found to be slower in the field than those measured in the laboratory. Understanding this discrepancy requires modeling the pore-scale interactions between reaction and transport processes, then scaling them up to reservoir dimensions. The new high-resolution model demonstrated that the mineral dissolution rate depends on the pore structure of the aquifer.”

Trebotich says “it was the hardest problem that we could do for the first run.” But the group redid the simulation about two-and-a-half times faster in an early trial of Edison, a Cray XC30 that succeeded Hopper. Edison, Trebotich says, has greater memory bandwidth.

Rapid changes

Generating 1-terabyte data sets for each microsecond time step, the Edison run demonstrated how quickly conditions can change inside each pore. It also provided a good workout for the combination of interrelated software packages the Trebotich team uses.

The first, Chombo, takes its name from a Swahili word meaning “toolbox” or “container” and was developed by a different Applied Numerical Algorithms Group team. Chombo is a supercomputer-friendly platform that’s scalable: “You can run it on multiple processor cores, and scale it up to do high-resolution, large-scale simulations,” Trebotich says.

Trebotich modified Chombo to add flow and reactive transport solvers. The group also incorporated the geochemistry components of CrunchFlow, a package Steefel developed, to create Chombo-Crunch, the code used for their modeling work. The simulations produce resolutions “very close to imaging experiments,” the NERSC report said, combining simulation and experiment to achieve a key goal of the Department of Energy’s Energy Frontier Research Center for Nanoscale Control of Geologic CO2.

Now Trebotich’s team has three huge allocations on DOE supercomputers to make their simulations even more detailed. The Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program is providing 80 million processor hours on Mira, an IBM Blue Gene/Q at Argonne National Laboratory. Through the Advanced Scientific Computing Research Leadership Computing Challenge (ALCC), the group has another 50 million hours on NERSC computers and 50 million on Titan, a Cray XK7 at Oak Ridge National Laboratory’s Leadership Computing Facility. The team also held an ALCC award last year for 80 million hours at Argonne and 25 million at NERSC.
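The allocation figures can be tallied in a few lines of Python. This is only a sketch: the dictionary labels are shorthand chosen here, and the comparison with the 20-million-hour Hopper run assumes processor hours are interchangeable across machines, which is a loose approximation because the systems differ.

```python
# Allocations in millions of processor hours, as quoted in the article.
current = {"Mira (INCITE)": 80, "NERSC (ALCC)": 50, "Titan (ALCC)": 50}
previous = {"Argonne (ALCC)": 80, "NERSC (ALCC)": 25}

HOPPER_RUN = 20  # millions of processor hours for the earlier Hopper simulation

current_total = sum(current.values())
previous_total = sum(previous.values())

# Rough comparison only: hours on different machines are not equivalent.
hopper_run_equivalents = current_total / HOPPER_RUN

print(f"Current: {current_total} million hours; last year: {previous_total} million")
print(f"Current total is about {hopper_run_equivalents:.0f}x the Hopper run")
```

By this count the current awards total 180 million hours, up from 105 million the year before.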

With the computer time, the group wants to refine their image resolutions to half a micron (half of a millionth of a meter). “This is what’s known as the mesoscale: an intermediate scale that could make it possible to incorporate atomistic-scale processes involving mineral growth at precipitation sites into the pore scale flow and transport dynamics,” Trebotich says.
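Why the finer resolution calls for so much more computer time can be seen in a short Python sketch. It assumes a uniform three-dimensional grid covering the same sample volume, which is a simplification made here for illustration rather than a description of how Chombo-Crunch lays out its mesh.

```python
def cell_count_factor(old_spacing_um, new_spacing_um, dims=3):
    """Growth in grid cells when the spacing shrinks.

    Assumption: a uniform grid over a fixed volume, so the cell count
    scales with (old spacing / new spacing) raised to the number of dimensions.
    """
    return (old_spacing_um / new_spacing_um) ** dims


# Going from 1 micron to half a micron in three dimensions.
factor = cell_count_factor(1.0, 0.5)
print(f"About {factor:.0f} times as many cells")
```

Halving the spacing doubles the cell count along each axis, or about eight times as many cells overall, before counting any smaller time steps the finer grid might require.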

Meanwhile, he thinks their micron-scale simulations already are good enough to provide “ground-truthing” in themselves for the lab experiments geoscientists do.

This story was originally printed in ASCR Discovery: http://ascr-discovery.science.doe.gov/bigiron/trebotich1.shtml

About Berkeley Lab

Founded in 1931 on the belief that the biggest scientific challenges are best addressed by teams, Lawrence Berkeley National Laboratory and its scientists have been recognized with 16 Nobel Prizes. Today, Berkeley Lab researchers develop sustainable energy and environmental solutions, create useful new materials, advance the frontiers of computing, and probe the mysteries of life, matter, and the universe. Scientists from around the world rely on the Lab’s facilities for their own discovery science. Berkeley Lab is a multiprogram national laboratory, managed by the University of California for the U.S. Department of Energy’s Office of Science.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.