Berkeley Lab Computational Scientists to Help Illuminate Dark Universe

New Project Will Probe 'Unknown 95 Percent'

September 5, 2012

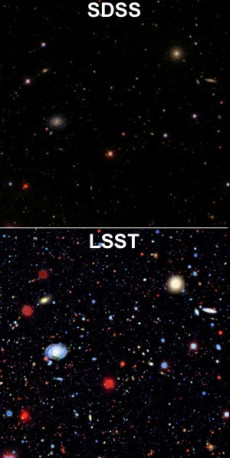

These images highlight the remarkable advance in depth and resolution of the Large Synoptic Survey Telescope (LSST) compared to today’s observations from the Sloan Digital Sky Survey (SDSS): In only one night LSST will capture data equivalent to five years of SDSS observing.

As part of a new Department of Energy collaboration aimed at illuminating the 95 percent of the universe known as dark matter and dark energy, researchers in Berkeley Lab’s Computational Research Division will apply their scientific computing expertise in simulation and analysis to boost the success of next-generation cosmology experiments.

Peter Nugent, co-leader of the Computational Cosmology Center, and Ann Almgren, an applied mathematician in the Center for Computational Sciences and Engineering (CCSE), are the leads for Berkeley Lab’s participation in the three-year project, called Computation-Driven Discovery for the Dark Universe, which is part of DOE’s Scientific Discovery through Advanced Computing (SciDAC) program. The Berkeley Lab component, which also includes Martin White and Joanne Cohn of the Physics Division and Zarija Lukic, a postdoctoral researcher, will be funded at $1.553 million of the total $5.314 million budgeted for the project.

The project will be led by Salman Habib of the High Energy Physics and the Mathematics and Computer Science Divisions at Argonne National Laboratory. A total of six DOE labs will collaborate in the project.

Over the past 20 years, cosmologists have made great strides in studying the composition of our universe. With data collected during two decades of surveying the sky, scientists have developed the celebrated Cosmological Standard Model. But the picture is far from complete. Two of the model’s key pillars, dark energy and dark matter, which together account for 95 percent of the mass-energy of the Universe, remain mysterious.

Deep fundamental questions demand answers: What is dark matter made of? Why is the Universe’s expansion rate accelerating? Should the theory of general relativity be modified? What is the nature of primordial fluctuations? What is the exact geometry of the Universe?

To address these burning questions, the Office of High Energy Physics and the Office of Advanced Scientific Computing Research in the Department of Energy’s Office of Science agreed to jointly fund the three-year project. DOE is leading or participating in a number of research surveys to learn more about dark energy, and this SciDAC project will help researchers get more science out of their survey data, Nugent said.

“We had all the tools, but we never tried to do a large-scale structure simulation of the Universe before,” Nugent said. “But we could see that this was the direction that DOE cosmology experiments were heading. So we decided to try this – and it worked. We submitted our first paper in July, and have several more on the way.”

Simulations to Support Observations

Among the programs that will benefit from the project is the current Baryon Oscillation Spectroscopic Survey (BOSS), which is part of the Sloan Digital Sky Survey (SDSS). Future projects include the Big Baryon Oscillation Spectroscopic Survey (BigBOSS), an international collaborative project led by Berkeley Lab that aims to collect up to 20 million galaxy spectra to further constrain the nature of dark energy; Euclid, a European Space Agency mission; and the Large Synoptic Survey Telescope (LSST), a joint DOE/National Science Foundation project. Both of the latter efforts will gather wide-field survey data in the infrared and optical that scientists hope will reveal fundamental properties of dark matter and dark energy. Each night, LSST will capture data equivalent to what the current SDSS captures in five years of observations.

Finally, the Dark Energy Survey (DES) is designed to probe the origin of the accelerating universe and help uncover the nature of dark energy by measuring the 14-billion-year history of cosmic expansion with high precision. More than 120 scientists from 23 institutions in the United States, Spain, the United Kingdom, Brazil, and Germany are working on the project.

Using Nyx, a new simulation code developed by CCSE and named after the Greek goddess of the night, the LBNL team will simulate gas and dark matter in a huge chunk of space – about 500 million light years on each side. The resolution will be fine enough to identify individual galaxies (the Milky Way is about 100,000 light years across). The high resolution enabled by Nyx will allow the team to study the low-density gas found between galaxies.

A key target of the project is simulating and understanding what is known as the Lyman-alpha forest, a series of plotted lines showing the spectrum of light waves from distant quasars as they are absorbed by hundreds or thousands of intervening low-density clouds of gas. As this light passes through these clouds, some of the light energy may be absorbed, which can indicate the presence of hydrogen and free electrons in space. When certain clouds produce more numerous absorption lines on the plotted graph, the lines appear denser and are referred to as a “forest.” This is of particular interest for cosmology as these clouds act as tracers of the structure of the universe. Since measurements are made at different points in time, due to the finite speed of light, one can trace the evolution of structure in the universe, which is directly related to the cosmological parameters. Projects like BOSS and BigBOSS are designed to measure hundreds of thousands of quasar spectra.
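As a rough illustration of why the absorption lines spread out into a "forest," the short sketch below applies the standard redshift relation to the hydrogen Lyman-alpha line, whose rest wavelength is about 1215.67 angstroms. The quasar and cloud redshifts are hypothetical values chosen for illustration; they are not taken from BOSS, BigBOSS, or the Nyx simulations.

```python
# Rest-frame wavelength of the hydrogen Lyman-alpha transition, in angstroms.
LYMAN_ALPHA_REST_ANGSTROM = 1215.67


def observed_wavelength(redshift: float) -> float:
    """Observed wavelength of Lyman-alpha light emitted or absorbed at `redshift`."""
    return LYMAN_ALPHA_REST_ANGSTROM * (1.0 + redshift)


# Hypothetical sight line: one quasar and three intervening gas clouds.
quasar_redshift = 3.0
cloud_redshifts = [2.2, 2.5, 2.9]  # illustrative values only, not survey data

quasar_emission = observed_wavelength(quasar_redshift)
absorption_lines = [observed_wavelength(z) for z in cloud_redshifts]

for z, wavelength in zip(cloud_redshifts, absorption_lines):
    print(f"cloud at z = {z:.1f} absorbs near {wavelength:.1f} angstroms")
print(f"quasar Lyman-alpha emission appears near {quasar_emission:.1f} angstroms")
```

Each cloud lies closer to the observer than the quasar, so its absorption line falls at a shorter observed wavelength than the quasar's own Lyman-alpha emission. Many clouds along one sight line therefore produce many distinct lines, each marking gas at a different distance and epoch.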

“Our simulations are designed to provide theoretical predictions for a variety of cosmological models. In addition, they will be able to replicate aspects of the experimental surveys and determine where they could go wrong,” Nugent said. “This is one area where you really need simulation to figure out how to correct systematic biases in the survey. It should tell us how well we did our observations.”

Computing Billions and Billions of Grid Cells

Running the simulations will generate massive datasets on some of DOE’s most powerful supercomputers. The team plans to conduct its initial runs on Hopper, a 153,216-processor-core Cray XE6 at the National Energy Research Scientific Computing Center (NERSC), using most of the system. Those runs will be 3D simulations with 4,096 grid cells on each side, nearly 69 billion cells in all, generating about 15 terabytes of data per time step. Over the course of a weekend, the team expects to simulate the history of the universe in this large box.

In the following year, the team is looking to run even higher resolution simulations, with 8,096 cells per side, or more than 530 billion grid cells. These runs are each expected to generate 100 terabytes of data. To handle simulations of this scale, the team is planning to use Titan, a Cray supercomputer at the Oak Ridge Leadership Computing Facility.
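The grid sizes quoted for the two sets of runs can be checked with a few lines of arithmetic. The sketch below assumes a uniform cubic grid and, for the data-volume estimate, that a terabyte means 10^12 bytes. The machine labels and the helper name `total_cells` are illustrative and are not part of Nyx.

```python
def total_cells(cells_per_side: int) -> int:
    """Number of cells in a uniform cubic grid with `cells_per_side` cells per edge."""
    return cells_per_side ** 3


# Cells per side for the two sets of runs described in the article.
runs = {"Hopper": 4096, "Titan": 8096}

for machine, cells_per_side in runs.items():
    cells = total_cells(cells_per_side)
    print(f"{machine}: {cells_per_side}^3 = {cells:,} cells ({cells / 1e9:.1f} billion)")

# Growth in cell count from the initial runs to the higher-resolution runs.
growth = total_cells(runs["Titan"]) / total_cells(runs["Hopper"])
print(f"the larger grid has about {growth:.1f} times as many cells")

# Rough data volume per cell for the initial runs, assuming 1 terabyte = 1e12 bytes.
BYTES_PER_TIME_STEP = 15e12  # "about 15 terabytes of data per time step"
bytes_per_cell = BYTES_PER_TIME_STEP / total_cells(runs["Hopper"])
print(f"roughly {bytes_per_cell:.0f} bytes written per cell per time step")
```

Because the cell count grows with the cube of the resolution, roughly doubling the number of cells per side multiplies the total by almost eight, which is why the larger runs call for a bigger machine.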

With such large jobs, the team faces the risk of being overwhelmed with data. To address some of the data issues, they are working with the Scalable Data Management, Analysis, and Visualization (SDAV) Institute, another SciDAC project, to develop ways to cull out the critical data as the simulations are running.

To visualize the data, they are also working with Hari Krishnan of the Computational Research Division, who will develop and manage the interfaces between Nyx and two leading scientific visualization tools: "yt," which is used for data analysis and visualization by the astrophysical community, and VisIt, an interactive parallel visualization and graphical analysis tool for viewing scientific data, developed by DOE’s Advanced Simulation and Computing Initiative.

About Berkeley Lab

Founded in 1931 on the belief that the biggest scientific challenges are best addressed by teams, Lawrence Berkeley National Laboratory and its scientists have been recognized with 16 Nobel Prizes. Today, Berkeley Lab researchers develop sustainable energy and environmental solutions, create useful new materials, advance the frontiers of computing, and probe the mysteries of life, matter, and the universe. Scientists from around the world rely on the Lab’s facilities for their own discovery science. Berkeley Lab is a multiprogram national laboratory, managed by the University of California for the U.S. Department of Energy’s Office of Science.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.