Spark Performance on Cray XC with Lustre

Frameworks such as Hadoop and Spark provide a high level and productive programming interface for large scale data processing and analytics. Through specialized runtimes they attain good performance and resilience on data center systems for a robust ecosystem of application specific libraries. This combination resulted in widespread adoption that continues to open new problem domains.

As multiple science fields have started to use analytics for filtering results between coupled simulations (e.g. materials science or climate) or extracting interesting features from high throughput observations (e.g. telescopes, particle accelerators), there is ample incentive to deploy existing large scale data analytics tools on High Performance Computing (HPC) systems. Yet most solutions are ad hoc, and data center frameworks have not gained traction in our community. In the following we provide a short report on our experiences porting and scaling Spark on two current, very large scale Cray XC systems (Edison and Cori), deployed in production at the National Energy Research Scientific Computing Center (NERSC).

In a distributed data center environment, disk I/O is optimized for latency by using local disks, and the network between nodes is optimized primarily for bandwidth. In contrast, HPC systems use a global parallel file system with no local storage: disk I/O is optimized primarily for bandwidth, while the network is optimized for latency. Our initial expectation was that, after porting Spark to Cray, we could couple large scale simulations using O(10,000) cores, benchmark Spark, and start optimizing it to exploit the strengths of HPC hardware: low latency networking and tightly coupled global name spaces on disk and in memory.

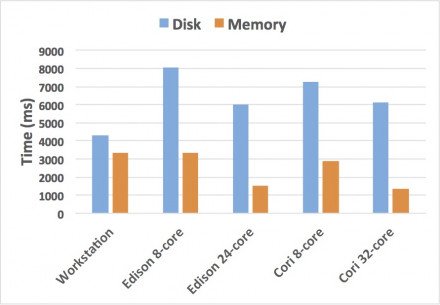

We ported Spark to run on the Cray XC family in Extreme Scalability Mode (ESM) and started by calibrating single node performance when using the Lustre global file system against that of a workstation with local SSDs: in this configuration a Cray node performed up to 4X slower than the workstation.

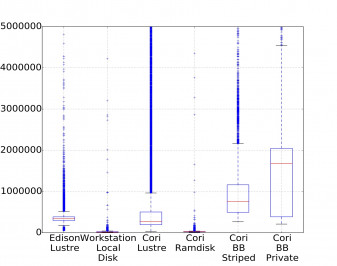

Unlike in clouds, where the presence of local disks means that Spark shuffle performance is dominated by the network, on HPC systems file system metadata performance initially dominates. As parallel I/O experts might expect [19], the determining performance factor is the file system metadata latency (e.g. occurring in fopen), rather than the latency or bandwidth of read or write operations. We found the magnitude of this problem surprising, even at small scale: the scalability of Spark when using the back-end Lustre file system is limited to O(100) cores.
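To make the distinction between metadata latency and data latency concrete, the following is a minimal sketch of how one might time file open calls separately from reads over many small files. It is not the instrumentation used in the study; the function name `time_open_vs_read`, the file naming, and the default sizes are assumptions chosen for illustration.

```python
import os
import tempfile
import time


def time_open_vs_read(directory, num_files=200, size_bytes=4096):
    """Return (seconds spent in open, seconds spent in read) over num_files small files.

    Hypothetical helper: the files stand in for the many small intermediate
    files a shuffle stage may touch.
    """
    payload = b"x" * size_bytes
    paths = []
    for i in range(num_files):
        path = os.path.join(directory, f"shuffle_{i}.dat")
        with open(path, "wb") as f:
            f.write(payload)
        paths.append(path)

    open_seconds = 0.0
    read_seconds = 0.0
    for path in paths:
        t0 = time.perf_counter()
        fd = os.open(path, os.O_RDONLY)  # metadata operation
        t1 = time.perf_counter()
        os.read(fd, size_bytes)          # data operation
        t2 = time.perf_counter()
        os.close(fd)
        open_seconds += t1 - t0
        read_seconds += t2 - t1
    return open_seconds, read_seconds


if __name__ == "__main__":
    # A temporary directory is used so the sketch runs anywhere; pointing
    # `directory` at a parallel file system mount versus a memory-backed
    # one is what would expose the gap discussed above.
    with tempfile.TemporaryDirectory() as tmp:
        opened, read = time_open_vs_read(tmp)
        print(f"open: {opened:.6f} s, read: {read:.6f} s")
```

On a freshly written local directory the reads are likely served from the page cache, so the numbers are only meaningful when compared across storage back ends.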

File open time.

After instrumenting Spark and the domain libraries evaluated (Spark SQL, GraphX), we concluded that a solution has to handle both the high level domain libraries (e.g. Parquet data readers or the application input stage) and the Spark internals. We calibrated single node performance, then performed strong and weak scaling studies on both systems. We evaluated software techniques to alleviate the single node performance gap in the presence of a parallel file system:

- The first and most obvious configuration is to use a local file system, either in main memory or backed by a single Lustre file, to handle the intermediate results generated during the computation. While this configuration does not handle the application level I/O, it improves performance during the Map and Reduce phases, and a single Cray node can match the workstation performance. This configuration enables scaling up to 10,000 cores and beyond; see the paper for more details.

- Because both application initialization and Spark itself open the same file multiple times, we explored “caching” solutions to eliminate file metadata operations. We developed a layer that intercepts and caches file metadata operations at both levels, in effect pooling open files. A single Cray node with pooling also matches workstation performance, and overall we see scalability up to 10,000 cores. Combining pooling with local file systems improves performance further (by up to 15%) by eliminating system calls during execution.
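The idea of caching file opens can be illustrated with a small pool of open file descriptors, so that repeated reads of the same path issue only one open call. This is a hedged sketch in Python, not the layer developed for Spark in the study; the `FilePool` class, its `capacity` parameter, and the `opens` counter are hypothetical names, and the code assumes a POSIX system where `os.pread` is available.

```python
import os
import tempfile
from collections import OrderedDict


class FilePool:
    """Hypothetical LRU pool of read-only file descriptors keyed by path."""

    def __init__(self, capacity=128):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._fds = OrderedDict()
        self.opens = 0  # number of real open calls issued

    def _get_fd(self, path):
        fd = self._fds.get(path)
        if fd is None:
            fd = os.open(path, os.O_RDONLY)  # the only metadata operation
            self.opens += 1
            self._fds[path] = fd
            if len(self._fds) > self.capacity:
                # Evict the least recently used descriptor.
                _, old_fd = self._fds.popitem(last=False)
                os.close(old_fd)
        else:
            self._fds.move_to_end(path)  # mark as most recently used
        return fd

    def read(self, path, offset, length):
        """Read `length` bytes at `offset`, reusing a pooled descriptor."""
        return os.pread(self._get_fd(path), length, offset)

    def close(self):
        for fd in self._fds.values():
            os.close(fd)
        self._fds.clear()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "block.dat")
        with open(path, "wb") as f:
            f.write(b"0123456789")
        pool = FilePool(capacity=4)
        first = pool.read(path, 0, 5)
        second = pool.read(path, 5, 5)
        print(first, second, "real opens:", pool.opens)
        pool.close()
```

A bounded pool is used here because the number of simultaneously open descriptors is limited per process; the trade-off is that a pooled descriptor keeps referring to the originally opened file even if the path is later replaced.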

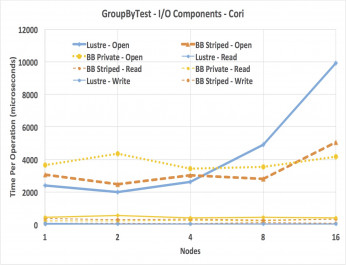


On Cori we also evaluated a layer of non-volatile storage (BurstBuffer) that sits between the processors’ memory and the parallel file system and is specifically designed to accelerate I/O performance. For GroupBy, a metadata-heavy benchmark, performance with BurstBuffer is better than with Lustre (by 3.5X on 16 nodes) but worse than with RAM-backed file systems (by 1.2X). The improvements come from lower fopen latency, rather than read/write latency. With BurstBuffer we can scale Spark only up to 1,200 cores. As this hardware is very new and not well tuned, we expect scalability to improve in the near future.

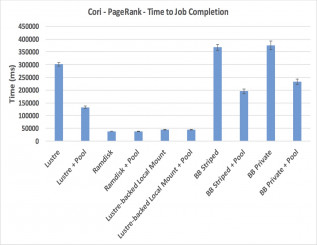

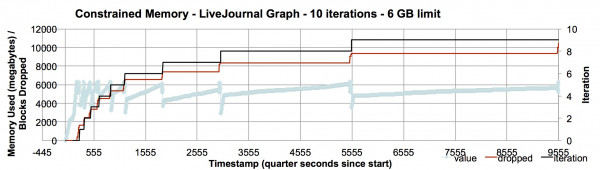

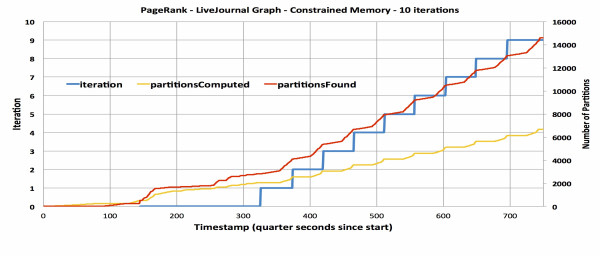

Besides metadata latency, file system access latency in read and write operations may limit scalability. In our study, this became apparent when examining iterative algorithms. As described in the paper, the Spark implementation of PageRank did not scale when solving problems that did not fit inside the node’s main memory. The cause was the interplay between resilience mechanisms and block management inside the Spark shuffle stage, which generated a number of I/O requests that increased exponentially with the number of iterations and overwhelmed the centralized storage system. We fixed this particular case at the algorithmic level, but a more generic approach is desirable to cover the space of iterative methods.

Overall, our study indicates that scaling data analytics frameworks on HPC systems is likely to become feasible in the near future: a single HPC style architecture can serve both scientific and data intensive workloads. The solution requires a combination of hardware support, systems software configuration and (simple) engineering changes to Spark and application libraries. Metadata performance is already a concern for scientific workloads, and HPC center operators are happily throwing more hardware at the problem. Hardware that adds large NVRAM as node local storage will decrease both metadata and file access overhead through better caching close to the processors. Orthogonal software techniques, such as the ones evaluated in this paper, can further reduce metadata impact. An engineering audit of the application libraries and the Spark internals will also eliminate many root causes of performance bottlenecks.